BTS: When is it safe to trust AI?

The trust heatmap + more insights from Vasant Dhar’s ‘Thinking with Machines’

I’ve talked to y’all about generative AI’s truth problem before—that pesky issue where apps like ChatGPT hallucinate, or make stuff up, at concerningly frequent rates.

But AI is more than just chatbots. It’s also self-driving cars and courtroom reporters and a whole slew of technologies where errors can be costly and impact real people’s lives. In a new book called Thinking with Machines by Vasant Dhar, professor at NYU’s Stern School of Business and the Center for Data Science, we learn the intricacies of when (and, perhaps more importantly, when not) to trust that AI will do what it’s supposed to do.

In Thinking with Machines (which features a foreword by Scott Galloway, also an NYU Stern professor, author and podcast host), Dhar proposes something he calls the trust heatmap.

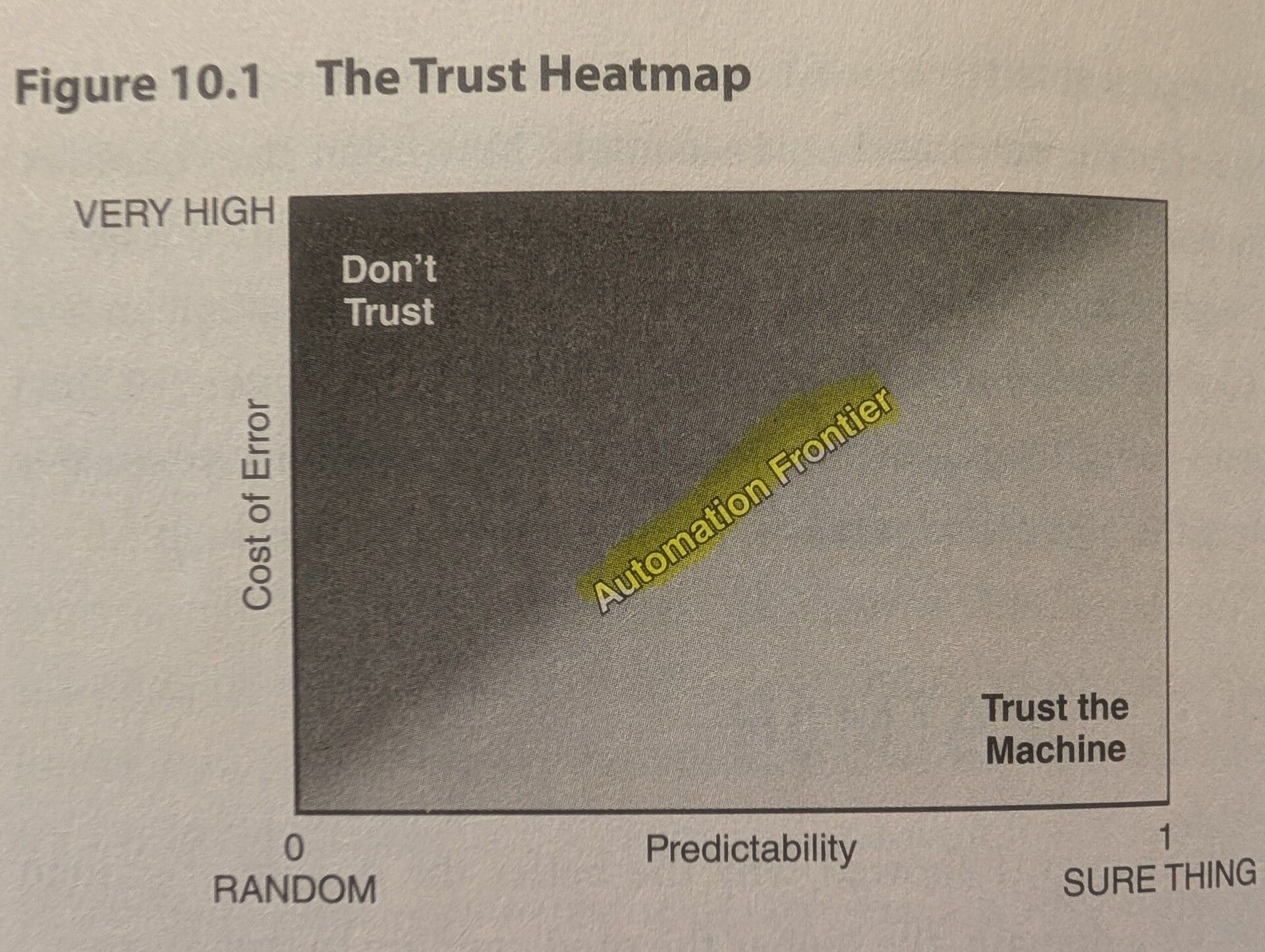

Snapshot of the trust heatmap from Thinking with Machines by Vasant Dhar

The trust heatmap helps us conceptualize whether or not to trust an AI-enabled technology that automates some previously human action or decision.

When we make the decision to trust an algorithm, we are deciding that the probability of an error is small enough to make the cost of a wrong decision tolerable; or alternatively, that the cost of errors is low enough to make us comfortable with a higher error rate.

The trust heatmap compares predictability (from totally random to a sure thing) and cost of error (from nil to very high). When an algorithm is totally certain to succeed and the cost of error is low, it makes sense to trust the machine. But at some point, as the predictability shrinks and/or the cost of error rises, we float backwards behind what Dhar calls the “automation frontier.”
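For the code-minded among y’all, here’s a rough sketch of that tradeoff in Python. To be clear, this is my own toy model, not anything from Dhar’s book: the 0-to-1 scales, the multiplication rule, the `tolerance` cutoff and the example numbers are all assumptions made up for illustration, and the real automation frontier may well be drawn differently.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    predictability: float  # assumed scale: 0.0 = totally random, 1.0 = a sure thing
    cost_of_error: float   # assumed scale: 0.0 = nil, 1.0 = very high


def expected_cost(case: UseCase) -> float:
    """Chance of an error multiplied by how much an error costs (toy assumption)."""
    return (1.0 - case.predictability) * case.cost_of_error


def inside_automation_frontier(case: UseCase, tolerance: float = 0.05) -> bool:
    """True if the expected cost is low enough to let the machine run on its own.

    `tolerance` is a hypothetical cutoff; in real life it depends on context.
    """
    return expected_cost(case) <= tolerance


# Made-up numbers, purely for illustration.
spam_filter = UseCase("spam filter", predictability=0.95, cost_of_error=0.1)
driverless_car = UseCase("driverless car", predictability=0.9, cost_of_error=1.0)

for case in (spam_filter, driverless_car):
    verdict = "trust the machine" if inside_automation_frontier(case) else "keep a human in the loop"
    print(f"{case.name}: {verdict}")
```

Notice that in this sketch a use case can land on the wrong side of the frontier even with fairly high predictability if the cost of an error is steep enough, which is the heart of the heatmap.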

Consider: Autonomous vehicles can have sensor errors and are not yet as good as humans at gathering information from the environment. Meanwhile, the cost of errors (potential loss of life) is massive.

But Dhar recognizes that “we may look back at 2025 as an inflection point for AI in transportation, when driverless cars started to attain more situational awareness.”

Factors like algorithmic predictability and cost of errors change over time. For example, chatbots that increase their so-called empathy to attract more users increase the risk of people developing delusional thinking and even psychosis (I’ve reported on this in more depth, including in this article where Dhar gave his insights on the mental health risks associated with chatbots).

Or as experts fine-tune chatbots, we might learn we can trust them to hallucinate less and come up with accurate answers more often.

Or the tendency for LLMs to inspire more homogenized thinking could make it harder for businesses relying on this tech for marketing to gain a competitive edge, thus increasing the cost of errors.

Ultimately, it’s the people behind AI—and the people using it—who remain responsible for the decisions an algorithm makes. We can’t put a code snippet in jail, after all. Learning when it’s worthwhile to let AI do its thing without major oversight, and when we’re better off keeping a human in the loop, will be crucial as our AI-enabled society develops.

Trust isn’t binary, but contextual.

You can find Vasant Dhar’s new book, Thinking with Machines, at all major retailers (but I’d recommend supporting indie booksellers via bookshop.org or your fave local bookshop, which can often order a copy just for you).
